Speech To Insights: Building A Real-Time Transcription App With Azure Speech-to-Text and RAG

Combining real-time transcription with Retrieval-Augmented Generation (RAG) changes how users interact with AI-powered applications. By showing accurate transcriptions as users speak and generating context-aware responses to their queries, you can deliver a dynamic and personalized experience.

In this blog, we’ll walk through building a real-time transcription app using MediaRecorder and Azure’s streaming Speech-to-Text API and explore how to enhance it with RAG functionality. We’ll also discuss using generative models other than OpenAI’s hosted ones for query response generation, which offers more flexibility and control.

Real-Time Transcription: How It Works

The transcription part of the app captures audio in real time using the MediaRecorder API in the browser and streams it to Azure’s Speech-to-Text API for transcription. This setup ensures that users receive transcriptions as they speak, with minimal delay.

Steps to Implement Real-Time Transcription:

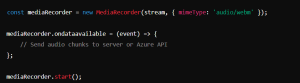

- Capture Audio Using MediaRecorder: The MediaRecorder API allows you to capture audio from the user’s microphone in real time and stream the audio chunks to your server or directly to an API.

- Stream Audio to Azure’s Speech-to-Text API: Azure’s Speech-to-Text API provides real-time transcription. You send the audio captured via MediaRecorder to Azure and get back interim and final transcription results while the user is still speaking.

- Display Real-Time Transcriptions: Once you receive the transcriptions from Azure, you can display them in the app UI, allowing users to see their speech converted into text in real time.
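The steps above can be sketched on the server side in Python. This is a minimal sketch, not the app’s actual code: it assumes the browser posts MediaRecorder chunks to your backend, that the audio has already been converted to 16 kHz, 16-bit mono PCM (MediaRecorder typically emits a compressed container such as WebM/Opus, which the default push stream does not accept as-is), and that the `azure-cognitiveservices-speech` package is installed. `TranscriptView`, `start_recognition`, and the `key`/`region` parameters are illustrative names.

```python
from dataclasses import dataclass, field


@dataclass
class TranscriptView:
    """Holds what the UI should show: finalized text plus the live hypothesis."""

    final_segments: list[str] = field(default_factory=list)
    partial: str = ""

    def on_partial(self, text: str) -> None:
        # Interim hypotheses replace each other until the phrase is finalized.
        self.partial = text

    def on_final(self, text: str) -> None:
        # A finalized phrase is appended and the interim text is cleared.
        if text:
            self.final_segments.append(text)
        self.partial = ""

    def render(self) -> str:
        parts = self.final_segments + ([self.partial] if self.partial else [])
        return " ".join(parts)


def start_recognition(key: str, region: str, view: TranscriptView):
    """Start continuous recognition on a push stream (assumes PCM input).

    Returns (stream, recognizer); feed audio with stream.write(chunk_bytes).
    """
    # Imported lazily so the display logic above works without the SDK installed.
    import azure.cognitiveservices.speech as speechsdk

    speech_config = speechsdk.SpeechConfig(subscription=key, region=region)
    stream = speechsdk.audio.PushAudioInputStream()  # default: 16 kHz, 16-bit, mono PCM
    audio_config = speechsdk.audio.AudioConfig(stream=stream)
    recognizer = speechsdk.SpeechRecognizer(
        speech_config=speech_config, audio_config=audio_config
    )
    # `recognizing` fires with interim text, `recognized` with the final phrase.
    recognizer.recognizing.connect(lambda evt: view.on_partial(evt.result.text))
    recognizer.recognized.connect(lambda evt: view.on_final(evt.result.text))
    recognizer.start_continuous_recognition()
    return stream, recognizer
```

Each incoming audio chunk would be passed to `stream.write(...)`, and `view.render()` gives the text to push to the UI. Calling `stream.close()` and `recognizer.stop_continuous_recognition()` ends the session.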

Adding RAG to Your Real-Time Transcription App: Enabling Query-Based Outputs

Now that your real-time transcription app is up and running, you can add RAG functionality to let users ask questions or issue commands based on the transcribed text. This will combine the power of retrieval with intelligent generation, delivering more informed and contextually relevant responses.

Key Steps to Implement RAG:

- Retrieve Relevant Data: Use a retriever component that can search relevant documents or databases based on the transcribed query. The retriever can use techniques such as Dense Passage Retrieval (DPR) or BM25 to fetch relevant documents or passages.

- Generate the Response: Instead of relying on OpenAI’s hosted GPT APIs, you can use openly available models for the generation part of RAG. These alternatives provide flexibility by allowing you to run models locally or on specific platforms, without being dependent on proprietary APIs.
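To make the two steps concrete, here is a small, dependency-free sketch of BM25 retrieval followed by prompt assembly for whichever generator you choose. It is an illustration under assumptions, not a production retriever: `BM25Retriever`, `tokenize`, and `build_prompt` are hypothetical names, the tokenizer is a simple regex, and `k1=1.5` and `b=0.75` are commonly used defaults rather than tuned values.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25Retriever:
    """Scores documents against a query with the Okapi BM25 formula."""

    def __init__(self, documents: list[str], k1: float = 1.5, b: float = 0.75):
        self.documents = documents
        self.k1, self.b = k1, b
        self.doc_tokens = [tokenize(d) for d in documents]
        self.doc_freqs = [Counter(tokens) for tokens in self.doc_tokens]
        total_len = sum(len(tokens) for tokens in self.doc_tokens)
        self.avg_len = (total_len / len(documents)) if total_len else 1.0
        # Number of documents that contain each term.
        self.df = Counter(term for freqs in self.doc_freqs for term in freqs)

    def _idf(self, term: str) -> float:
        n = self.df.get(term, 0)
        total = len(self.documents)
        # The "+ 1" inside the log keeps the IDF non-negative.
        return math.log((total - n + 0.5) / (n + 0.5) + 1.0)

    def score(self, query: str, index: int) -> float:
        freqs = self.doc_freqs[index]
        length_norm = 1 - self.b + self.b * len(self.doc_tokens[index]) / self.avg_len
        result = 0.0
        for term in tokenize(query):
            tf = freqs.get(term, 0)
            if tf:
                result += self._idf(term) * tf * (self.k1 + 1) / (tf + self.k1 * length_norm)
        return result

    def search(self, query: str, top_k: int = 2) -> list[str]:
        scored = [(self.score(query, i), i) for i in range(len(self.documents))]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        # Documents with a zero score share no terms with the query.
        return [self.documents[i] for s, i in scored[:top_k] if s > 0]


def build_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and the transcribed question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
```

In the app, the transcribed utterance would be passed to `search(...)` as the query, and the resulting prompt would be handed to the generation model. A dense retriever such as DPR would replace `BM25Retriever` while keeping the same `search` interface.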

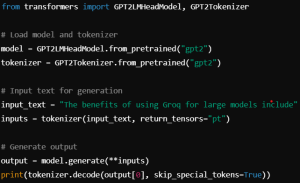

Using GPT-2: GPT-2 is a language model whose weights OpenAI released openly, so it can be run locally. It generates coherent text based on a given input prompt.

Why Use GPT-2?

- Versatile for different text generation tasks.

- Available in multiple sizes (from 124M to 1.5B parameters) for different performance needs.
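As a rough sketch of how GPT-2 could fill the generation step, the snippet below builds a prompt from retrieved passages and runs it through the Hugging Face `transformers` text-generation pipeline. It assumes `transformers` (with a backend such as PyTorch) is installed and uses the public `gpt2` checkpoint; `build_prompt` and `answer_with_gpt2` are hypothetical helper names. Keep in mind that base GPT-2 is not instruction-tuned, so answer quality may be limited.

```python
def build_prompt(question: str, passages: list[str]) -> str:
    """Place retrieved passages before the question so the model can condition on them."""
    context = " ".join(passages)
    return f"Context: {context}\nQuestion: {question}\nAnswer:"


def answer_with_gpt2(question: str, passages: list[str], generator=None,
                     max_new_tokens: int = 40) -> str:
    """Generate an answer with GPT-2 given retrieved passages."""
    if generator is None:
        # Imported lazily; in a real app, create the pipeline once and reuse it.
        from transformers import pipeline

        generator = pipeline("text-generation", model="gpt2")
    prompt = build_prompt(question, passages)
    outputs = generator(
        prompt,
        max_new_tokens=max_new_tokens,
        do_sample=False,          # greedy decoding for repeatable output
        return_full_text=False,   # return only the continuation, not the prompt
    )
    return outputs[0]["generated_text"].strip()
```

Passing a pre-built `generator` avoids reloading the model on every request, which matters for a real-time app.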

Using Non-OpenAI Models for Generation

Here are some noteworthy open-source models you can use:

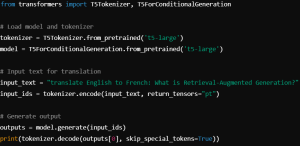

1. T5 (Text-to-Text Transfer Transformer): T5 converts all NLP tasks into a text-to-text format, making it versatile for tasks like translation and summarization.

Why Use T5?

- Handles various NLP tasks with ease.

- Available in different sizes, so you can trade speed against accuracy.
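Because T5 treats every task as text in, text out, the retrieved passages and the transcribed question can be packed into a single input string. The sketch below assumes the Hugging Face `transformers` library with PyTorch and the public `t5-small` checkpoint; `format_t5_input` and `answer_with_t5` are hypothetical names, and the `question: ... context: ...` layout follows the question-answering format T5 was trained with, which may need adjusting for fine-tuned variants.

```python
def format_t5_input(question: str, passages: list[str]) -> str:
    """Build a single text-to-text input from the question and retrieved passages."""
    context = " ".join(passages)
    return f"question: {question} context: {context}"


def answer_with_t5(question: str, passages: list[str],
                   model_name: str = "t5-small", max_new_tokens: int = 32) -> str:
    """Generate an answer with a T5 checkpoint given retrieved passages."""
    # Imported lazily; in a real app, load the tokenizer and model once at startup.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)
    inputs = tokenizer(
        format_t5_input(question, passages),
        return_tensors="pt",
        truncation=True,   # long contexts are cut to the model's input limit
        max_length=512,
    )
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Swapping `model_name` for a larger checkpoint is one way to trade latency for answer quality.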

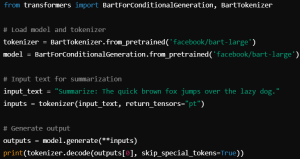

2. BART (Bidirectional and Auto-Regressive Transformers): BART is highly effective for tasks such as summarization and question answering. It can generate text based on retrieved information and is particularly strong in producing structured outputs.

Why Use BART?

- Generates coherent and accurate text outputs.

- Ideal for structured tasks.

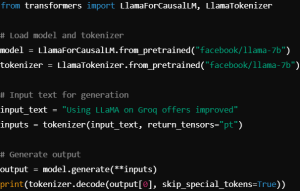

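3. LLaMA (Large Language Model Meta AI): LLaMA is a family of models from Meta, with weights available under Meta’s license, designed for efficient, high-performance text generation.

Why Use LLaMA?

- Smaller variants can offer low latency for real-time applications, depending on your hardware.

- Capable of handling complex queries.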

Deployment Considerations: The Role of AI & Data Science

- Choosing the Right Infrastructure: For real-time transcription and RAG generation, ensure low-latency infrastructure.

- API Integration: Build an API layer that interfaces with the transcription and retrieval systems.

- Latency Optimization: Delays add up across transcription, retrieval, and generation, so measure each stage and keep the end-to-end response time low.
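One simple way to act on the latency point is to time each stage of the pipeline separately, so you can see whether transcription, retrieval, or generation dominates. The following is a minimal sketch using only the Python standard library; `StageTimer` and the stage names are illustrative, not part of any framework.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from statistics import quantiles


class StageTimer:
    """Collects per-stage latencies in milliseconds."""

    def __init__(self) -> None:
        self.samples: dict[str, list[float]] = defaultdict(list)

    @contextmanager
    def measure(self, stage: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the elapsed time even if the stage raised an exception.
            self.samples[stage].append((time.perf_counter() - start) * 1000.0)

    def p95(self, stage: str) -> float:
        """95th-percentile latency for a stage (0.0 if nothing was recorded)."""
        data = self.samples.get(stage, [])
        if len(data) < 2:
            return data[0] if data else 0.0
        # With n=20 there are 19 cut points; the last one is the 95th percentile.
        return quantiles(data, n=20, method="inclusive")[-1]


if __name__ == "__main__":
    timer = StageTimer()
    for _ in range(5):
        with timer.measure("retrieval"):
            time.sleep(0.002)  # stand-in for a real retrieval call
    print(f"retrieval p95: {timer.p95('retrieval'):.1f} ms")
```

Wrapping the calls to the transcription, retrieval, and generation services in `timer.measure(...)` gives a per-stage breakdown to guide infrastructure choices.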

Conclusion

Integrating real-time transcription with Retrieval-Augmented Generation (RAG) can lead to a highly interactive and dynamic user experience. Exploring alternatives to OpenAI’s hosted models, such as the openly available GPT-2, T5, or BART, offers increased flexibility and control, particularly regarding deployment and cost management.

With the right infrastructure and model selection, scalable, low-latency applications can be deployed efficiently to meet diverse user needs.

Looking to implement AI & Data Science solutions? Contact PIT Solutions for expert guidance.